Too late now

Earlier this month, Facebook’s algorithm flagged 21 posts from the Auschwitz Museum as violating community standards — and now parent company Meta is eating crow.

In a Facebook post, the Poland-based memorial said that Meta has apologized, though not directly, after the company's content moderation algorithm pushed some of the museum's posts down the feed over bizarre claims that they violated community standards.

“We mistakenly sent a message to the Auschwitz Museum that several pieces of content the museum had posted had been downgraded,” a Meta spokesperson told The Telegraph. “In fact, that content does not violate our policies and has never actually been demoted. We sincerely apologize for the error.”

In an April 12 post, the museum announced the erroneous flags and accused the social network of “algorithmically erasing history.”

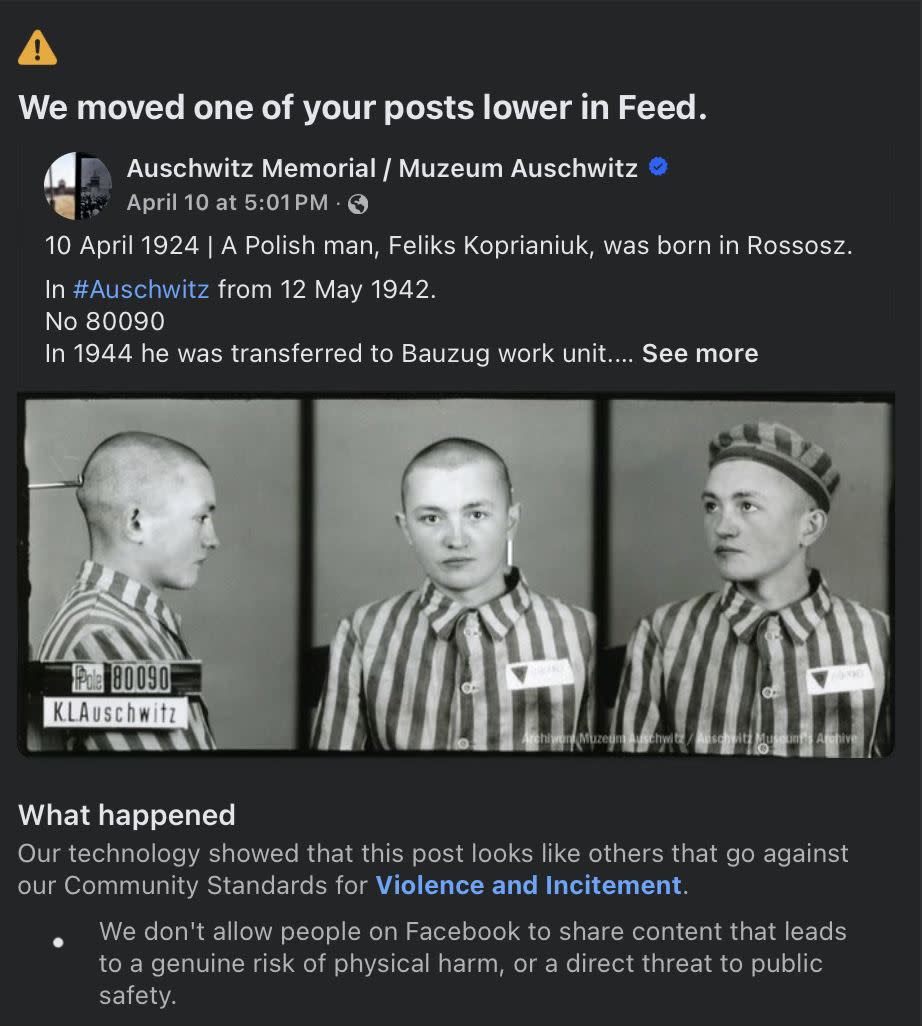

“The posts, which serve as tributes to individual victims of Auschwitz, have been wrongly targeted by this platform’s content moderation system and ‘placed lower in the feed,’ citing absurd reasons such as ‘Adult Nudity and Sexual Activity,’ ‘Bullying and Harassment,’ ‘Hate Speech,’ and ‘Violence and Incitement,’” the post reads.

As screenshots show, none of the posts in question contained any such content. Instead, they featured portraits of Auschwitz victims along with brief descriptions of their lives and identities before they were murdered by the Nazis.

Common problem

Although the flags have since been withdrawn, many are sounding the alarm about how AI-powered systems like this one are taking control of important messages out of human hands.

Shortly after the museum disclosed the flags, Poland’s Digital Minister Krzysztof Gawkowski slammed the site for such a blatant error, calling it a “scandal and an illustration of problems with automatic content moderation” in a translated post on X, formerly Twitter.

Gawkowski’s demand that Meta explain itself further was echoed by the Campaign Against Antisemitism, which said in a statement to The Telegraph that the company’s apology did not go far enough.

“Meta must explain why its algorithm treats the real history of the Holocaust with suspicion,” a representative for the group told the British newspaper, “and what it can do to ensure these stories continue to be told and shared.”

It’s bad enough that Meta’s content algorithm flagged such important historical material as problematic. But in the context of the company's other major AI moderation failures, including automatically translating ‘Palestinian’ as ‘terrorist’ and allegedly promoting pedophilic content, this is a particularly galling insult.

More on Meta's AI: Meta’s AI tells users it has a child